CPU vs GPU for deep learning

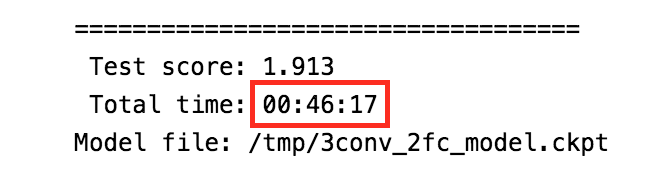

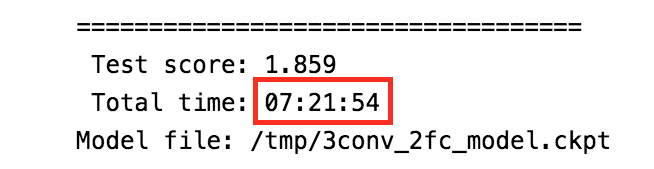

If anyone is wondering why you would need to use AWS for machine learning after reading this post, here’s a real example. I tried training the same model with the same data on the CPU of my MacBook Pro (2.5 GHz Intel Core i7) and on the GPU of an AWS instance (g2.2xlarge).

*Results for the GPU-enabled AWS instance (g2.2xlarge) and the MacBook Pro CPU (2.5 GHz Intel Core i7).*

The model here is a CNN with 3 convolutional layers and 2 fully connected layers, implemented with TensorFlow. It was trained on ~1500 grayscale 96x96 images in batches of 36 for 1000 epochs. As you can see, in this case training on the GPU was approximately 10 times faster than on the CPU. That’s a huge difference!
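To get a feel for the amount of work behind that comparison, here is a rough back-of-the-envelope sketch. It assumes exactly 1500 images (the real count was only approximate), counts a final partial batch as a full step, and assumes the inputs are stored as float32. The function names are made up for illustration and are not part of the original training code.

```python
import math

# Figures taken from the post; the image count is approximate ("~1500").
NUM_IMAGES = 1500
IMAGE_SIDE = 96        # grayscale, so a single channel
BATCH_SIZE = 36
EPOCHS = 1000
BYTES_PER_FLOAT32 = 4  # assumption: inputs are stored as float32


def training_workload(num_images, batch_size, epochs):
    """Return (steps_per_epoch, total_steps), counting the last partial batch."""
    steps_per_epoch = math.ceil(num_images / batch_size)
    return steps_per_epoch, steps_per_epoch * epochs


def batch_bytes(batch_size, side, bytes_per_value=BYTES_PER_FLOAT32):
    """Size in bytes of one batch of square single-channel images."""
    return batch_size * side * side * bytes_per_value


steps_per_epoch, total_steps = training_workload(NUM_IMAGES, BATCH_SIZE, EPOCHS)
print(steps_per_epoch, total_steps)         # 42 42000
print(batch_bytes(BATCH_SIZE, IMAGE_SIDE))  # 1327104
```

Under these assumptions the run is about 42,000 weight updates on batches of roughly 1.3 MB each, so any per-step speedup from the GPU gets multiplied tens of thousands of times.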

To be fair, if I could have used my MacBook Pro’s GPU, the results might have been roughly in the same ballpark. Unfortunately, it’s equipped with an AMD GPU that only offers an OpenCL interface, and TensorFlow doesn’t support OpenCL yet.
